Putting the future of artificial intelligence to the test (Exploring GPT-3)

The title and introductory paragraphs were written entirely by GPT-3.

There are many ideas about the future of artificial intelligence. The capabilities of computer systems are rapidly increasing at an exponential rate, and with that comes the question of how far they will go. There are many advancements that are taking place in computer science, with natural language processing being one of the most promising.

For example, natural language processing uses computer algorithms to find patterns in language, similar to how humans process language. These patterns are how computers derive meaning, and this technique can be used to accomplish almost any task. One reason for the prevalence of natural language processing is because many of the tasks computers are able to perform require complicated equations or large datasets, both of which are easier to analyze than language.

While the future of artificial intelligence is unclear, advancements in natural language processing combined with other technologies will inevitably lead to incredible discoveries.

When I first heard about GPT-3 last July, I was skeptical. I had spent the past few days exploring GPT-2 and found it cool, but impractical. I couldn’t understand how a gimmicky black box model could ever be useful in practice.

GPT-3 is completely different from its predecessor. It is apparent that the developers put in far more work, on top of more data and compute. GPT-3 actually generates useful, relevant text and does a much better job of filtering out offensive content and general nonsense. I’ve seen it used in various ways in industry, from content generation to an out-of-the-box zero-shot learning setup for other NLP tasks.

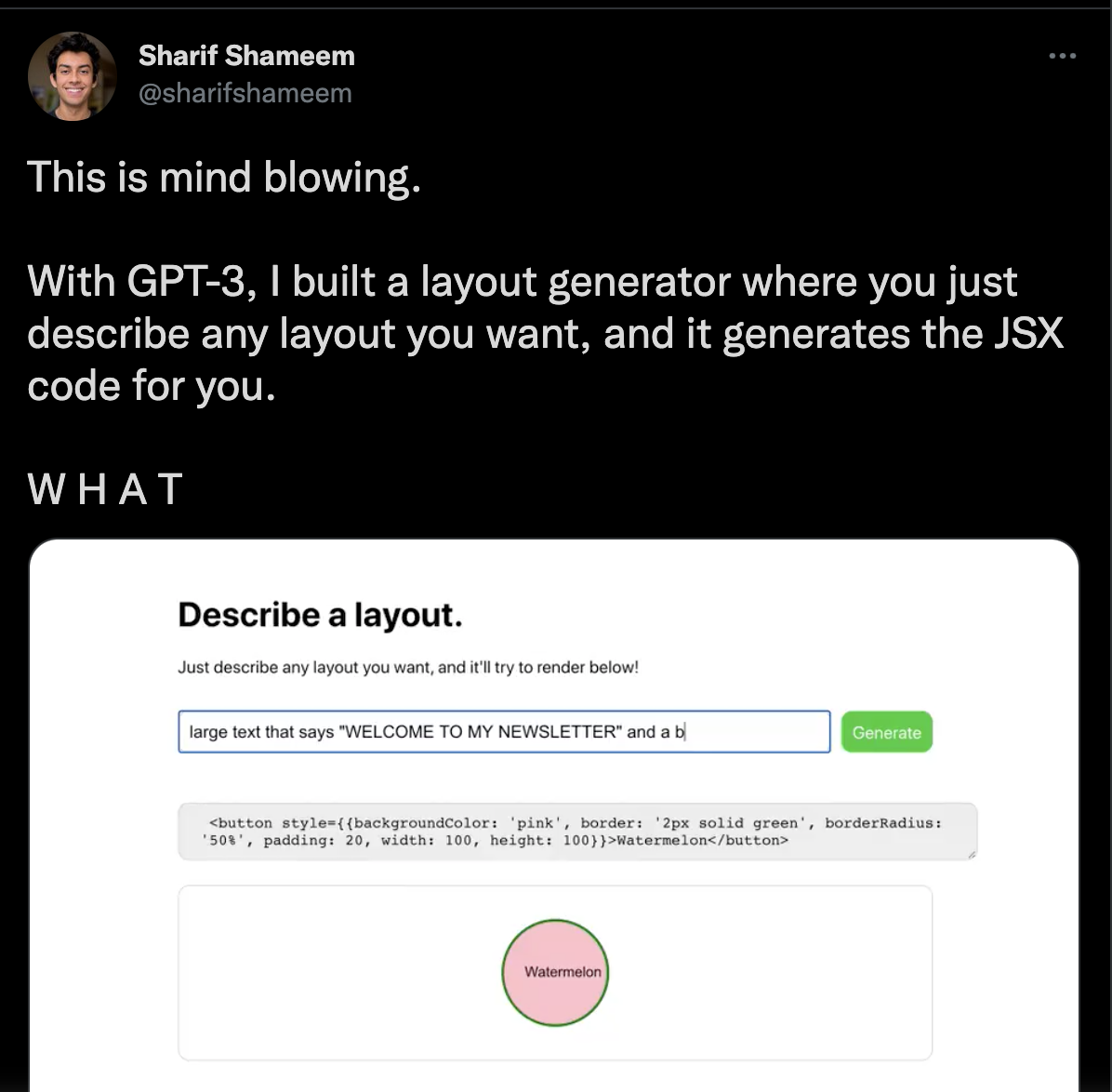

GPT-3 is an autoregressive language model that uses deep learning to produce human-like text. There are numerous examples circulating on the internet showing the cool things it can do. Here, GPT-3 is used to translate a plain English description into working JavaScript code.

For the example at the top of this post, I instructed GPT-3 to write a catchy title and introduction using the davinci-instruct-beta model (the instruct models are fine-tuned to treat prompts as instructions). This was the instruction I provided:

Write an article talking about the future of natural language processing and artificial intelligence, with a focus around advancements. Make the opening sentence catchy and include a title.

Honestly, the results were really good. I’m quite impressed.

I put together a quick Python library to simplify GPT-3 priming. Priming is just a fancy way of saying “finding the right prompts to improve subsequent model predictions”. In this library, the prompt is constructed using “instructions” and “examples”, both of which can be added or removed.

GPT-3 generally does very well even with a short instruction and a few examples of your intended use case. Each example is typically split into a labeled input and a labeled output. For instance, GPT-3 can be used to predict food ingredients based on the following prompt:

Given the name of a food, list the ingredients used to make this meal.

Food: apple pie

Ingredients: apple, butter, flour, egg, cinnamon, crust, sugar

Food: guacamole

Ingredients: avocado, tomato, onion, lime, salt
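To make the instruction-plus-examples idea concrete, here is a minimal sketch of how such a prompt could be assembled in Python. This is not the library mentioned above: the `PromptBuilder` class, its method names, and the "banana bread" query are all hypothetical, chosen only to illustrate the structure. It only builds the prompt string and does not call the GPT-3 API.

```python
from dataclasses import dataclass, field


@dataclass
class PromptBuilder:
    """Hypothetical helper (not the author's library) that assembles a
    few-shot prompt from one instruction and a list of examples."""

    instruction: str
    input_label: str = "Input"
    output_label: str = "Output"
    examples: list = field(default_factory=list)

    def add_example(self, source, target):
        # Store each example as an (input, output) pair.
        self.examples.append((source, target))

    def remove_example(self, source):
        # Drop every example whose input matches `source`.
        self.examples = [(s, t) for s, t in self.examples if s != source]

    def build(self, query):
        # Instruction first, then each labeled example, then the new
        # input with an empty output label for the model to complete.
        parts = [self.instruction]
        for source, target in self.examples:
            parts.append(f"{self.input_label}: {source}")
            parts.append(f"{self.output_label}: {target}")
        parts.append(f"{self.input_label}: {query}")
        parts.append(f"{self.output_label}:")
        return "\n\n".join(parts)


builder = PromptBuilder(
    instruction="Given the name of a food, list the ingredients used to make this meal.",
    input_label="Food",
    output_label="Ingredients",
)
builder.add_example("apple pie", "apple, butter, flour, egg, cinnamon, crust, sugar")
builder.add_example("guacamole", "avocado, tomato, onion, lime, salt")

# "banana bread" is an illustrative query, not taken from the post.
prompt = builder.build("banana bread")
print(prompt)
```

Ending the prompt on an empty `Ingredients:` label is the design choice that matters here: the model is left to continue the established pattern, so the examples do most of the steering.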

To gain access to the GPT-3 API, you have to join the waitlist. I applied to this list last September and never got a response. I applied again this Monday and got access the next day.

Here’s what I did differently:

- Used an organization email address instead of my personal email

- The first time, I was honest and said I wanted to explore the capabilities of GPT-3 without any projects in mind; this time, I thought carefully about applications

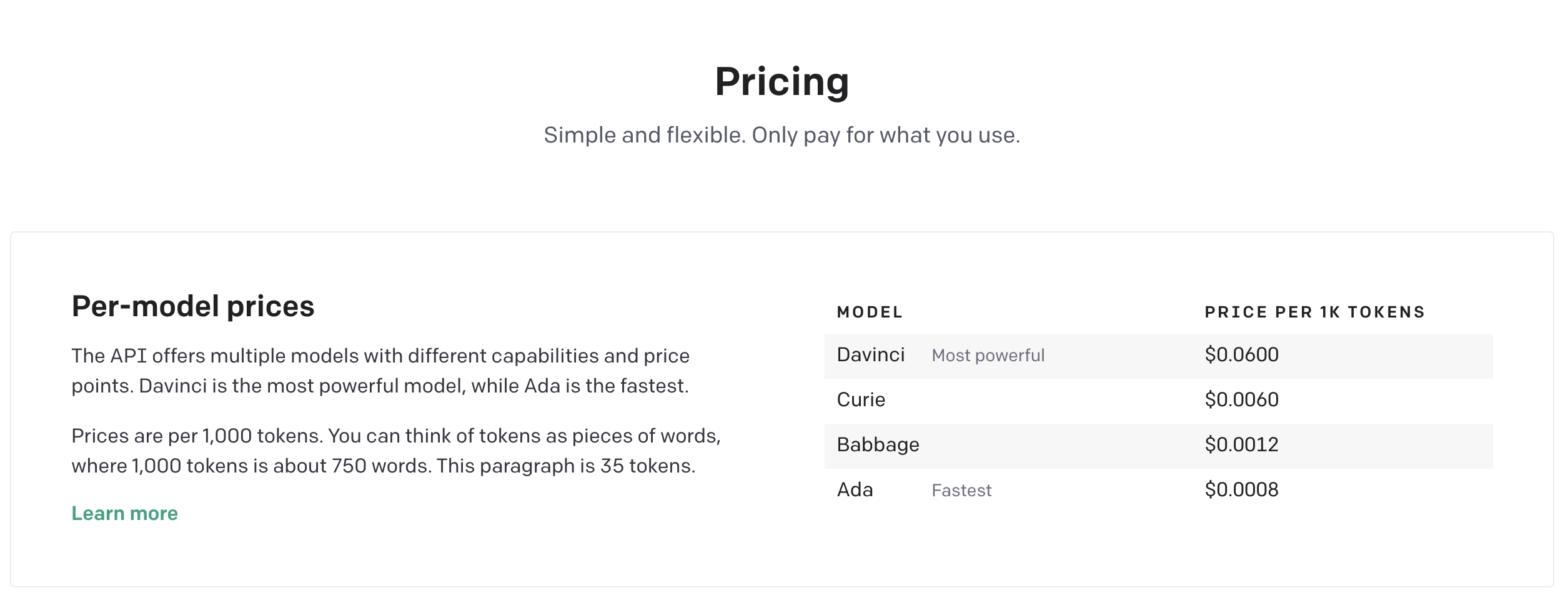

I am excited to start building some apps, but the service is not cheap.

Another concern with such a powerful language generation model is how it handles individual privacy. Can it be primed to return a phone number or look up an address? There’s an article on the Google Blog that explored these concerns using GPT-2. TL;DR: 600 of 1,800 sequences were memorized from the public training data, covering a wide range of content including news headlines, log messages, JavaScript code and PII.

In any case, I am excited to spend more time exploring this API. Instead of wrapping this post up with a grandiose conclusion, I’ll let GPT-3 finish this one :)

With the progress in NLP, there are more possibilities in the future. Society will soon start to flourish in its potential too.